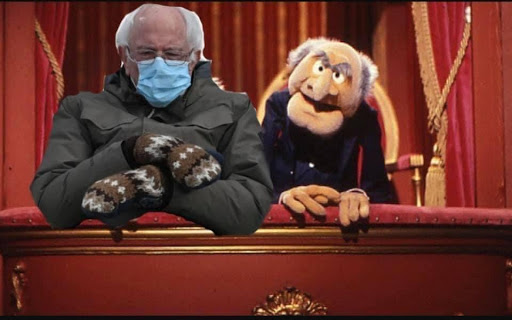

The idea for this week’s blog post started just after the Presidential Inauguration, when all of a sudden Bernie Sanders memes flooded the internet. Bernie and his mittens were photoshopped into just about every landscape, movie poster, or iconic photo you can think of. Some of my personal favorites are below:

What struck me was how rapidly and how easily this was able to happen, with readily available templates making it simple for anyone with a phone or a computer to manipulate this image. Some of the creations were almost believable, even though we knew they weren’t real.

While certainly a benign example, this situation highlighted the ease with which digital media can be manipulated. In the wrong hands, this capability can be dangerous. It is therefore becoming increasingly essential to develop tools that can discern whether photos have been altered, or whether they aren’t actually “photos” at all but are instead composed entirely of computer graphics.

In our last Dataspace newsletter, we referenced the use of AI to detect text that had been generated by AI instead of written by a human. It turns out that AI is also quite useful for recognizing the signs that a photo has been digitally altered. It’s all about the data.

There are a couple of big players at the forefront of developing these AI-based detection tools.

For example, Adobe is working in collaboration with researchers at UC Berkeley to train AI tools that are capable of detecting changes that have been made via certain features of their photo editing software.

Jigsaw (a company under the Alphabet umbrella) takes a somewhat broader approach (i.e., not focused on one specific tool), and has been working to develop a platform that contains an ensemble of several different kinds of image manipulation detectors, as well as one detector designed specifically to identify deepfakes.

Both efforts frame their work in the context of combating the spread of disinformation and democratizing media forensics.

Similarly, both tools are still in the experimental phase, and it remains to be seen how well they perform on photos that have been degraded by multiple shares across different social media platforms. In the meantime, the best tools we can rely on are our own awareness that we may need to think twice before accepting the veracity of images we see online, and a mindset of approaching digital media with a more critical eye.