The last edition of our newsletter focused on some very creative applications of data science tools and methods. Most of these were lighthearted, reflecting the creators' interest in their subject matter: like true scientists, they applied their skills to the questions that interested them.

In contrast, today’s newsletter delves into some of the data science “grey areas”: uses and applications that raise ethical and legal questions deserving careful consideration.

To start in the most general sense, we need to be aware of the many areas where questions about the ethical use of data science tools have come into play. It is equally important to recognize that we may hold problematic preconceptions that can stand in the way of truly productive discussions of ethics and technology.

The good news is that these ethical questions are, in fact, being raised. While by no means exhaustive, the list below highlights some of the most salient areas of data science usage currently under scrutiny:

Facial Recognition: First and foremost, this technology raises fears of government surveillance. Beyond the ethical concerns inherent in that topic, caution is also warranted in applying facial recognition tools, given their current limitations and the propensity of AI systems to misclassify certain groups.

Taking this topic a few shades of grey further, the analytics company Faception claims to have developed a computer vision and machine learning tool that provides personality prediction analytics. The company hopes to see it used to identify terrorists and other criminals; however, this model carries many ethical issues and considerable potential for misuse.

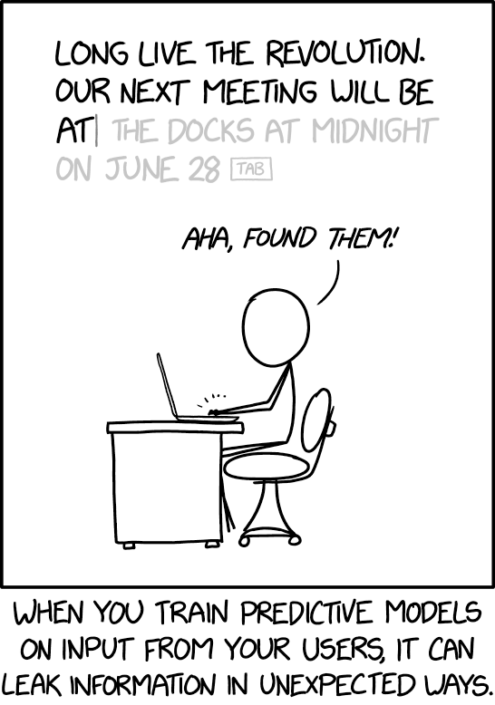

Decision Making: The term “data-driven decision making” implies that the process relies entirely on “neutral” data and is free from the biases a human decision maker brings to the table. However, given the evidence that societal biases can be built into these algorithms, we need to be cautious not only about outsourcing decisions to computers but also about leaning heavily on machine learning (ML) outputs during the decision-making process, particularly in fields that can significantly affect the rights and freedoms of entire populations.

Creativity and Intellectual Property Issues: As AI becomes more capable of producing “new” material, new questions arise: Can an algorithm be credited as an “inventor”? Does it infringe intellectual property rights if it is trained on others’ creations? These debates are likely to continue for quite some time.
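For readers who want a concrete starting point on the misclassification and built-in bias concerns raised above, here is a minimal sketch of one basic check: comparing a model's error rate across groups. The function name `error_rates_by_group` and the toy data are hypothetical, and a gap in error rates is only one signal; on its own it neither proves nor rules out bias.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return the misclassification rate for each group label."""
    totals = defaultdict(int)  # number of examples seen per group
    errors = defaultdict(int)  # number of wrong predictions per group
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


# Hypothetical toy data: true labels, model predictions, and a group attribute
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)  # {'A': 0.0, 'B': 0.75}
```

Here the model looks perfect for group A but is wrong three times out of four for group B, exactly the kind of disparity that an overall accuracy figure (62.5% in this toy case) would hide.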

The topics above raise questions that do not yet have definitive answers. Stay tuned for our next installment, where we look at some more obviously nefarious uses of data science technologies.

Until next time, Happy Coding